Datanommer / Datagrepper

Datanommer

Reads-in messages from the bus

Stores them into the database

Datagrepper

Exposes the messages in the database via an API with different filtering capacity

Investigation

Here is the list of ideas/things we looked at:

Conclusions

We have investigated different ways to improve the database storing our 180 millions messages. While we considered looking at the datagrepper application itself as well, we considered that replacing datagrepper with another application would have too large consequences. We have a number of applications in our realm that rely on datagrepper’s API and there is an unknown number of applications outside our realm that make use of it as well. Breaking all of these applications is a non-goal for us. For this reason we focused on postgresql first.

We looked at different solutions, starting with manually partitioning on year, then on

id (not msg_id, the primary key field id which is an integer). We then

looked at using the postgresql plugin timescaledb and finally we looked at using this

plugin together with a database model change where the relation tables are merged into

the main messages table and their is stored using arrays.

Based on our investigations, our recommendation is to migrate the postgresql database to

use the timescaledb plugin and configure datagrepper to have a default delta value via

DEFAULT_QUERY_DELTA.

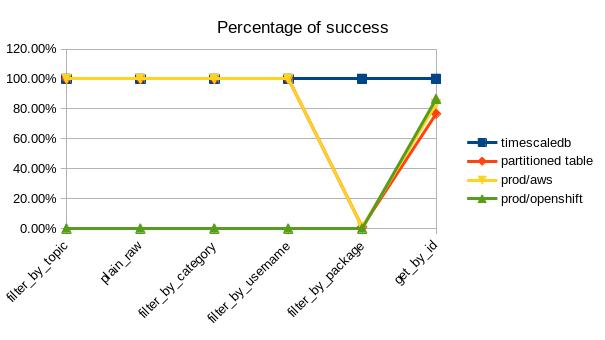

As a picture is worth a thousand words:

We checked, setting a DEFAULT_QUERY_DELTA alone provides already some performance

gain, using timescaledb with DEFAULT_QUERY_DELTA provide the most gain but using

timescaledb without DEFAULT_QUERY_DELTA brings back the time out issues we are

seeing today when datagrepper is queried without a specified delta value.

We also believe that the performance gain observed with timescaledb could be

reproduced if we were to do the partitioning ourself on the timestamp field of the

messages table. However, it would mean that we have to manually maintain that

partitioning, take care of creating the new partitions as needed and so on, while

timescaledb provides all of this for us automatically, thus simplifying the long term

maintenance of that database.

Proposed roadmap

We propose the following roadmap to improve datanommer and datagrepper:

0/ Announce the upcoming API breakage and outage of datagrepper

Be loud about the upcoming changes and explain how the API breakage can be mitigated.

1/ Port datanommer to fedora-messaging and openshift

This will ensure that there are no duplicate messages are saved in the database (cf our ref:timescaledb_findings). It will also provide a way to store the messages while datagrepper is being upgraded (which will require an outage). Using lazy queues in rabbitmq may be a way to store the high number of messages that will pile up during the outage window (which will be over 24h).

Rabbitmq lazy queues: https://www.rabbitmq.com/lazy-queues.html

2/ Port datagrepper to timescaledb.

This will improve the performance of the UI. Thanks to rabbitmq, no messages will be lost, they will only show up in datagrepper at the end of the outage and with a delayed timestamp.

3/ Configure datagrepper to have a DEFAULT_QUERY_DELTA.

This will simply bound a number of queries which otherwise run slow and lead to timeouts at the application level.

4/ Port datagrepper to openshift

This will make it easier to maintain and/or scale as needed.

5/ Port datagrepper to fedora-messaging

This will allow to make use of the fedora-messaging schemas provided by the applications instead of relying on fedmsg_meta_fedora_infrastructure.